visit

Comparing Apache Kafka with Oracle Transactional Event Queues (TEQ) as Microservices Event Mesh by@paulparkinson

503 reads

Comparing Apache Kafka with Oracle Transactional Event Queues (TEQ) as Microservices Event Mesh

by Paul ParkinsonSeptember 13th, 2021

Too Long; Didn't Read

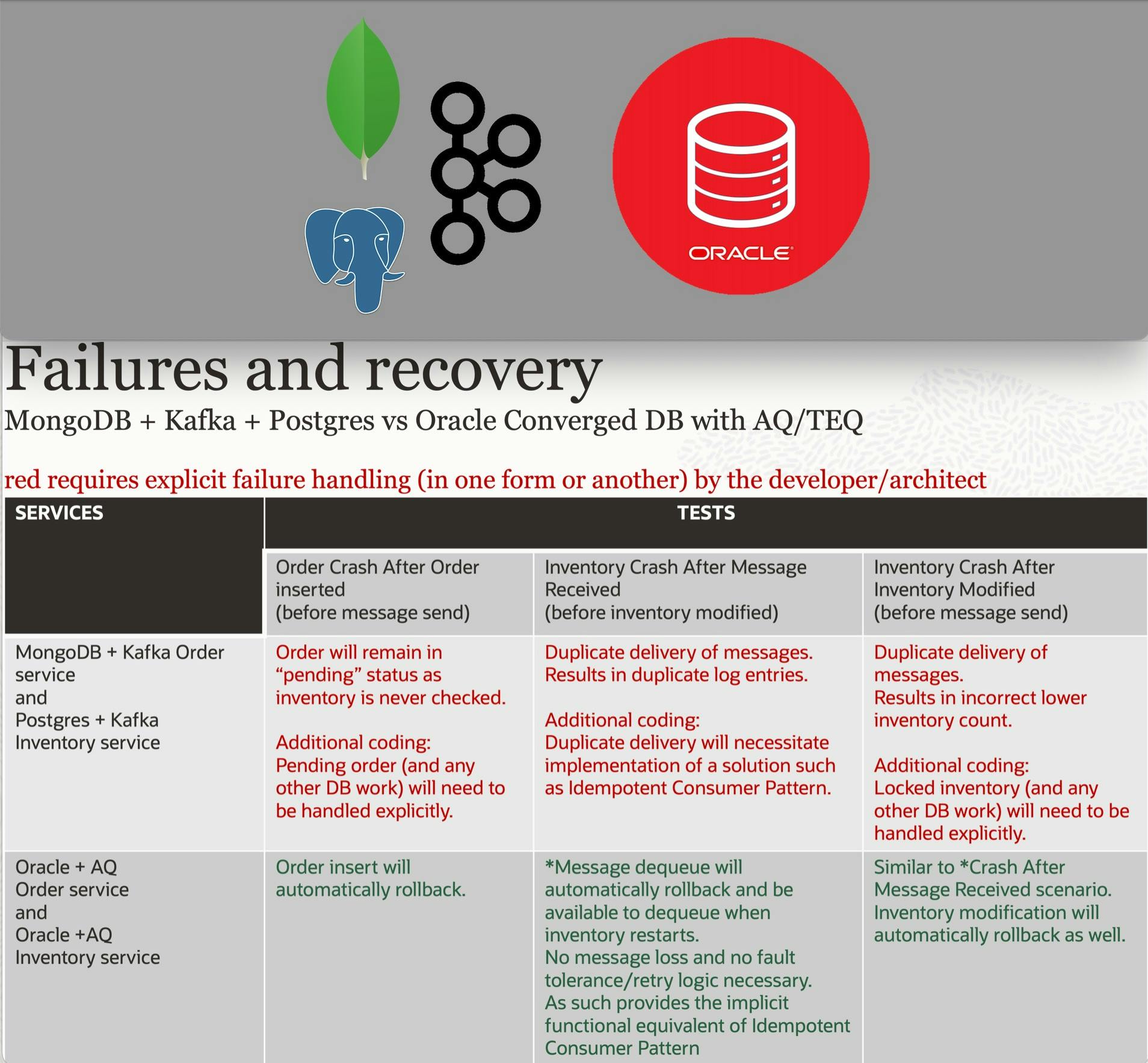

As opposed to Kafka, Oracle Transaction Event Queues provide exactly-once delivery and atomicity between database and messaging activities without the need for the developer to write any duplicate-consumer code nor use distributed transactions, etc.*(matrix above is referred to in the blog below)

Event Queues

Transaction Event Queues

- The Order service places an order (inserted in the database via JSON document with the status "pending") and sends an "orderPlaced" message to the Inventory service (two operations in total)

- The Inventory service receives this message, checks and modifies the inventory level (via a SQL query), and sends an "inventoryStatusUpdated" message to the Order service (three operations in total).

-

The Order service receives this message and updates the status of the order (two operations in total). A matrix of failures (occurring at three selected points in the flow) and recovery scenarios comparing MongoDB, PostgresSQL, and Kafka against Oracle Converged DB with Transactional Event Queues/AQ are shown in the table at the begining of this blog.

This article was

L O A D I N G

. . . comments & more!

. . . comments & more!